Setting up software RAID1 (mirroring) while installing Debian.

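On Debian, software RAID is built from partitions, not whole disks. (So it should also be possible to build an array from several partitions on the same disk, though there seems to be little point in doing that.)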

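Page 616 of Kenshi Muto's Debian GNU/Linux 徹底入門 第3版 Sarge対応 (Debian GNU/Linux: A Thorough Introduction, 3rd edition, for Sarge) explains the procedure in detail, so I used it as my guide.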

The PC is the same one used in "Checking the specs of the Dospara Prime A Lightning AM on Debian etch".

Installer: Debian GNU/Linux 4.0r1 (etch) netinst CD for the AMD64 architecture (amd64).

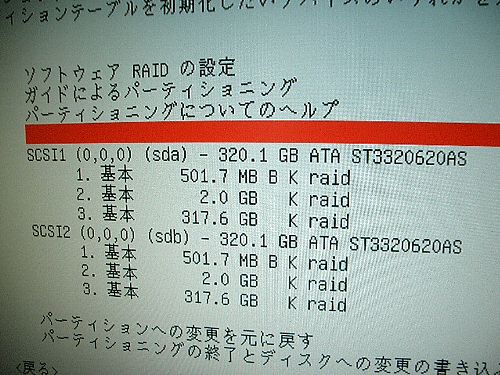

HDD and RAID layout

This time I use two HDDs of the same model (Seagate ST3320620AS, 320GB).

First HDD: sda

Second HDD: sdb

The RAID1 layout looks like this:

| Mount point | Primary/logical | Size | Filesystem | SCSI partitions | RAID device |
|---|---|---|---|---|---|
| /boot | Primary | 500MB | ext3 | sda1, sdb1 | md0 |
| swap | Primary | 2.0GB | swap | sda2, sdb2 | md1 |
| / | Primary | 317.6GB | ext3 | sda3, sdb3 | md2 |

Partitioning in the installer

Partition the disks manually.

On the "Partition disks" screen, partition both hard disks, sda and sdb.

I give /boot its own partition, just in case: it seems like it will make things easier to deal with if something goes wrong.

For the partitions that will hold /boot, turn the "Bootable flag" on for both sda1 and sdb1 (I'm not sure whether this is necessary).

Set "Use as" to "physical volume for RAID" for every partition.

Once the partitions are created, choose "Configure software RAID".

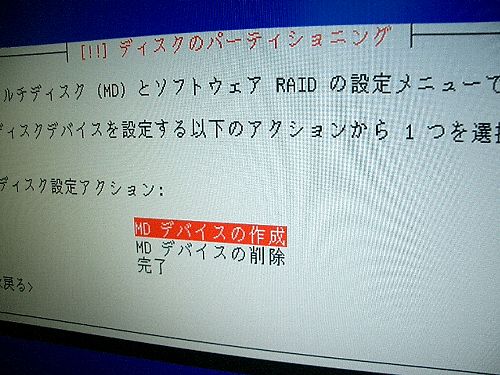

Choose "Create MD device". (MD stands for "multiple devices"; the installer calls the result a multidisk device.)

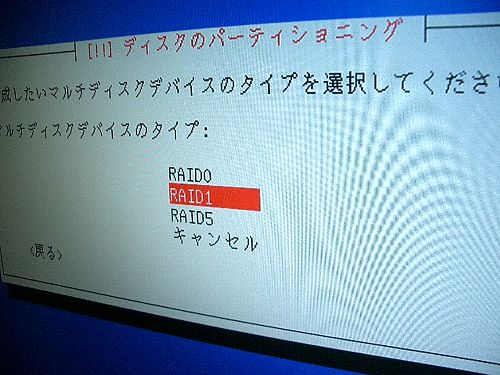

Choose "RAID1".

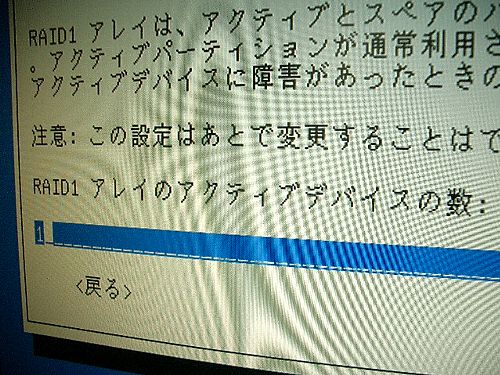

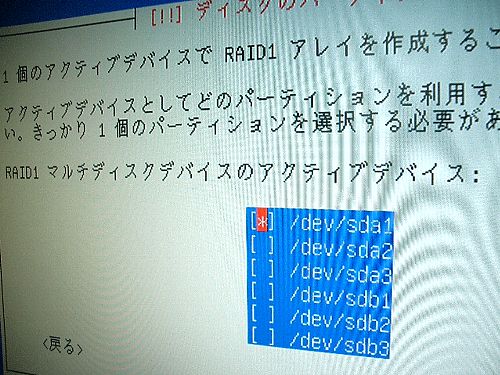

Enter "1" for "Number of active devices for the RAID1 array".

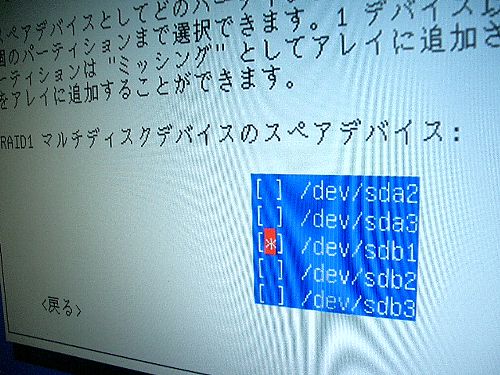

Enter "1" for "Number of spare devices for the RAID1 array".

* This was a mistake. I later changed the number of active devices to 2 with the mdadm command.

Select /dev/sda1 for "Active devices for the RAID1 multidisk device".

Select /dev/sdb1 for "Spare devices for the RAID1 multidisk device".

Repeat "Create MD device" twice more in the same way:

Create one MD device from /dev/sda2 and /dev/sdb2.

Create one MD device from /dev/sda3 and /dev/sdb3.

With this setup, if an active device dies, the system can be brought back by making the spare the active device...?

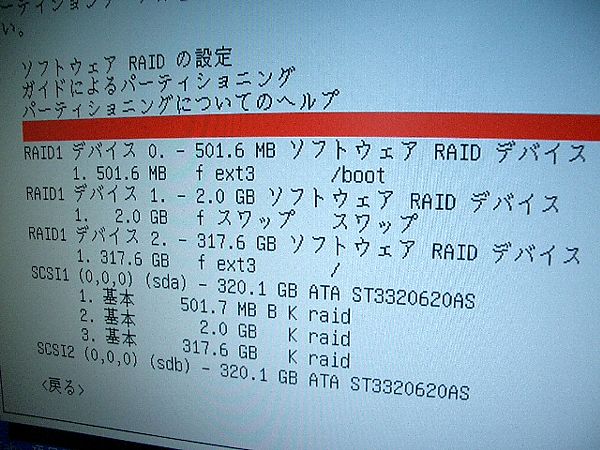

The result looks like this:

/dev/sda1 + /dev/sdb1 => /dev/md0 (/boot)

/dev/sda2 + /dev/sdb2 => /dev/md1 (swap)

/dev/sda3 + /dev/sdb3 => /dev/md2 (/)
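For comparison, the menu steps above roughly correspond to mdadm --create. The sketch below is an assumption, not a record of what the installer actually runs: it builds each mirror with two active devices and no spare, which is the layout this post eventually settles on (the mdadm man page quoted at the end says --raid-devices plus --spare-devices must equal the number of component devices listed). create_mirrors and the MDADM override are hypothetical names; MDADM=echo prints the commands instead of running them.

```shell
#!/bin/sh
# Sketch (assumption): create md0..md2 as two-way RAID1 mirrors of sdaN + sdbN.
# MDADM is a hypothetical override: MDADM=echo prints the commands instead.
create_mirrors() {
    for n in 1 2 3; do
        # Two active members and no spare: 2 + 0 equals the two partitions listed.
        "${MDADM:-mdadm}" --create "/dev/md$((n - 1))" --level=1 \
            --raid-devices=2 --spare-devices=0 "/dev/sda$n" "/dev/sdb$n"
    done
}
```

Running create_mirrors for real writes RAID superblocks onto those partitions; `MDADM=echo create_mirrors` only prints the three commands.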

After that, I went through installing the base system and the GRUB boot loader, and the machine rebooted without problems.

State after installation

Let's look at some system information.

# uname -a

Linux hogehoge 2.6.18-5-amd64 #1 SMP Thu May 31 23:51:05 UTC 2007 x86_64 GNU/Linux

# df -ha

Filesystem Size Used Avail Use% Mounted on

/dev/md2 292G 725M 276G 1% /

tmpfs 943M 0 943M 0% /lib/init/rw

proc 0 0 0 - /proc

sysfs 0 0 0 - /sys

procbususb 0 0 0 - /proc/bus/usb

udev 10M 68K 10M 1% /dev

tmpfs 943M 0 943M 0% /dev/shm

devpts 0 0 0 - /dev/pts

/dev/md0 464M 18M 422M 5% /boot

# cat /proc/scsi/scsi

Attached devices:

Host: scsi0 Channel: 00 Id: 00 Lun: 00

Vendor: ATA Model: ST3320620AS Rev: 3.AA

Type: Direct-Access ANSI SCSI revision: 05

Host: scsi1 Channel: 00 Id: 00 Lun: 00

Vendor: ATA Model: ST3320620AS Rev: 3.AA

Type: Direct-Access ANSI SCSI revision: 05

Host: scsi2 Channel: 00 Id: 00 Lun: 00

Vendor: HL-DT-ST Model: DVDRAM GSA-H62N Rev: CL00

Type: CD-ROM ANSI SCSI revision: 05

# ls /dev | grep sda

sda

sda1

sda2

sda3

# ls /dev | grep sdb

sdb

sdb1

sdb2

sdb3

# ls /dev | grep md

md

md0

md1

md2

# fdisk /dev/sda -l

Disk /dev/sda: 320.0 GB, 320072933376 bytes

255 heads, 63 sectors/track, 38913 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sda1 * 1 61 489951 fd Linux raid autodetect

/dev/sda2 62 304 1951897+ fd Linux raid autodetect

/dev/sda3 305 38913 310126792+ fd Linux raid autodetect

# fdisk /dev/sdb -l

Disk /dev/sdb: 320.0 GB, 320072933376 bytes

255 heads, 63 sectors/track, 38913 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 * 1 61 489951 fd Linux raid autodetect

/dev/sdb2 62 304 1951897+ fd Linux raid autodetect

/dev/sdb3 305 38913 310126792+ fd Linux raid autodetect

# cat /proc/mdstat

Personalities : [raid1]

md2 : active raid1 sda3[0] sdb3[1](S)

310126720 blocks [1/1] [U]

md1 : active raid1 sda2[0] sdb2[1](S)

1951808 blocks [1/1] [U]

md0 : active raid1 sda1[0] sdb1[1](S)

489856 blocks [1/1] [U]

One thing bothers me a little: scsi0 and scsi1 are the HDDs, and scsi2 is the DVD drive.

The HDDs are connected to SATA1 and SATA3 on the motherboard and the DVD drive to SATA2, so I expected scsi0 and scsi2 to be the HDDs. Something to watch out for?

Everything up to this point was a failed attempt...

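It turns out the spare does not simply act as a mirror. orz

A spare holds no copy of the data: md writes to a spare only when it takes the place of a failed active member, and it is then rebuilt from the members that are still working. With a single active device (the [1/1] [U] in /proc/mdstat above: one member configured, one working), a failure would go like this:

active device crashes -> system stops -> no surviving member to rebuild the spare from -> the spare cannot take over

So the setup as it stands gives no redundancy at all.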
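The [n/m] field in /proc/mdstat can be checked mechanically: n is the number of devices the array is configured for, m the number currently working, and a spare marked (S) is not counted in either. The awk sketch below is an illustration under those assumptions (check_mdstat is a hypothetical helper name): it flags arrays with a missing member as degraded, and arrays with fewer than two working members as having no redundancy.

```shell
#!/bin/sh
# Sketch (assumption): print "name n/m status" for each array in a
# /proc/mdstat-style file, flagging degraded or non-redundant arrays.
# Usage: check_mdstat [file]   (defaults to /proc/mdstat)
check_mdstat() {
    awk '
        # An array line looks like: "md2 : active raid1 sda3[0] sdb3[1](S)"
        /^md[0-9]+ :/ { name = $1; next }
        # The following line carries e.g. "310126720 blocks [1/1] [U]"
        name != "" && match($0, /\[[0-9]+\/[0-9]+\]/) {
            split(substr($0, RSTART + 1, RLENGTH - 2), c, "/")
            status = "ok"
            if (c[2] + 0 < c[1] + 0) status = "degraded"
            else if (c[2] + 0 < 2)   status = "no redundancy"
            printf "%s %s/%s %s\n", name, c[1], c[2], status
            name = ""
        }
    ' "${1:-/proc/mdstat}"
}
```

Run against the first /proc/mdstat output above, this would report all three arrays as "no redundancy"; against the final one, all as "ok".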

Changing the number of active devices from 1 to 2

On Debian etch, software RAID is apparently configured and managed with a command called mdadm.

# mdadm --help

does not describe --grow, but

# mdadm --grow --help

does.

Is it some kind of hidden option?

I was able to increase the number of devices in the array with the following steps (there were originally two):

# mdadm --grow /dev/md0 -n3

# mdadm /dev/md0 -a /dev/hda1

[debian-users:45326] Re: RAIDでスペアドライブを追加する方法?

According to man mdadm:

-G, --grow

Change the size or shape of an active array.

The GROW MODE section of the man page also explains it.

For a quick summary, mdadm --grow --help is the easiest to follow.

# mdadm --grow --help

Usage: mdadm --grow device options

This usage causes mdadm to attempt to reconfigure a running array.

This is only possibly if the kernel being used supports a particular

reconfiguration. This version only supports changing the number of

devices in a RAID1, and changing the active size of all devices in

a RAID1/4/5/6.

Options that are valid with the grow (-G --grow) mode are:

--size= -z : Change the active size of devices in an array.

: This is useful if all devices have been replaced

: with larger devices.

--raid-disks= -n : Change the number of active devices in a RAID1

: array.

Let's go ahead and set the number of active devices to 2.

# mdadm --grow /dev/md0 -n2

# mdadm --grow /dev/md1 -n2

# mdadm --grow /dev/md2 -n2
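The three commands above can also be written as a loop; --raid-devices is the long form of -n. This sketch (grow_mirrors and the MDADM override are hypothetical names) stops at the first failure so that a problem with one array is not hidden behind the others. As the /proc/mdstat output below shows, the spare already attached to each array is then synced into the new slot, and arrays sharing the same disks are rebuilt one after another.

```shell
#!/bin/sh
# Sketch (assumption): give md0..md2 two active devices, as done by hand above.
# The spare already in each array is then rebuilt as the second mirror half.
# MDADM is a hypothetical override: MDADM=echo prints the commands instead.
grow_mirrors() {
    for md in /dev/md0 /dev/md1 /dev/md2; do
        "${MDADM:-mdadm}" --grow "$md" --raid-devices=2 || return 1
    done
}
```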

Running cat /proc/mdstat shows...

# cat /proc/mdstat

Personalities : [raid1]

md2 : active raid1 sda3[0] sdb3[2]

310126720 blocks [2/1] [U_]

resync=DELAYED

md1 : active raid1 sda2[0] sdb2[2]

1951808 blocks [2/1] [U_]

[==========>..........] recovery = 50.6% (989888/1951808) finish=0.2min speed=76145K/sec

md0 : active raid1 sda1[0] sdb1[1]

489856 blocks [2/2] [UU]

unused devices: <none>

md0 now has two active devices.

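A recovery line has appeared: md1 is being rebuilt (sdb2 is being synced from sda2), and md2 shows resync=DELAYED, meaning it waits until md1 is finished.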

After waiting a while, running cat /proc/mdstat again shows...

# cat /proc/mdstat

Personalities : [raid1]

md2 : active raid1 sda3[0] sdb3[2]

310126720 blocks [2/1] [U_]

[>....................] recovery = 0.0% (57536/310126720) finish=89.7min speed=57536K/sec

md1 : active raid1 sda2[0] sdb2[1]

1951808 blocks [2/2] [UU]

md0 : active raid1 sda1[0] sdb1[1]

489856 blocks [2/2] [UU]

unused devices: <none>

Now md2 is being rebuilt.

"finish=89.7min"... (^_^; So it will take more than another hour...

Still, being able to turn the RAID spare into an active device while the OS keeps running is very handy.

(Replacing an HDD does require powering the machine down, of course.)

Then, after waiting about an hour and a half:

# cat /proc/mdstat

Personalities : [raid1]

md2 : active raid1 sda3[0] sdb3[1]

310126720 blocks [2/2] [UU]

md1 : active raid1 sda2[0] sdb2[1]

1951808 blocks [2/2] [UU]

md0 : active raid1 sda1[0] sdb1[1]

489856 blocks [2/2] [UU]

unused devices: <none>

The recovery lines are gone. The rebuild is complete.
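Instead of re-running cat /proc/mdstat by hand, one could poll until the resync and recovery lines disappear (`watch cat /proc/mdstat` is the interactive alternative). A minimal sketch, assuming those keywords (plus reshape and resync=DELAYED) are what marks work in progress; wait_for_sync is a hypothetical name, and it fails rather than returning early if the file cannot be read.

```shell
#!/bin/sh
# Sketch (assumption): block until no array in the mdstat file is resyncing,
# recovering, reshaping or waiting (resync=DELAYED), polling every INTERVAL s.
# Usage: wait_for_sync [file] [interval]
wait_for_sync() {
    file=${1:-/proc/mdstat}
    interval=${2:-60}
    # A missing file would make grep fail and end the loop, so check first.
    [ -r "$file" ] || return 1
    while grep -qE 'resync|recovery|reshape' "$file"; do
        sleep "$interval"
    done
}
```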

Let's also look at the details with mdadm --detail.

# mdadm --detail /dev/md0

/dev/md0:

Version : 00.90.03

Creation Time : Mon Sep 24 00:55:38 2007

Raid Level : raid1

Array Size : 489856 (478.46 MiB 501.61 MB)

Device Size : 489856 (478.46 MiB 501.61 MB)

Raid Devices : 2

Total Devices : 2

Preferred Minor : 0

Persistence : Superblock is persistent

Update Time : Mon Sep 24 07:00:59 2007

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

UUID : 8bba7643:fd066bd7:c4dd6cdd:ed8a149f

Events : 0.8

Number Major Minor RaidDevice State

0 8 1 0 active sync /dev/sda1

1 8 17 1 active sync /dev/sdb1

# mdadm --detail /dev/md1

/dev/md1:

Version : 00.90.03

Creation Time : Mon Sep 24 00:55:52 2007

Raid Level : raid1

Array Size : 1951808 (1906.38 MiB 1998.65 MB)

Device Size : 1951808 (1906.38 MiB 1998.65 MB)

Raid Devices : 2

Total Devices : 2

Preferred Minor : 1

Persistence : Superblock is persistent

Update Time : Mon Sep 24 07:07:07 2007

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

UUID : 142686ef:43298491:b6bdce52:8a7fc717

Events : 0.6

Number Major Minor RaidDevice State

0 8 2 0 active sync /dev/sda2

1 8 18 1 active sync /dev/sdb2

# mdadm --detail /dev/md2

/dev/md2:

Version : 00.90.03

Creation Time : Mon Sep 24 00:56:01 2007

Raid Level : raid1

Array Size : 310126720 (295.76 GiB 317.57 GB)

Device Size : 310126720 (295.76 GiB 317.57 GB)

Raid Devices : 2

Total Devices : 2

Preferred Minor : 2

Persistence : Superblock is persistent

Update Time : Mon Sep 24 08:31:35 2007

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

UUID : 9705e4d8:ff326a8a:0820ec24:30988c0c

Events : 0.152

Number Major Minor RaidDevice State

0 8 3 0 active sync /dev/sda3

1 8 19 1 active sync /dev/sdb3
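The State and Active Devices lines are the ones to watch, but for scripts the --test option listed in the man page below (-t, used with --detail) is handier, because it sets mdadm's exit status. This sketch assumes the exit codes documented for mdadm --detail --test: 0 normal, 1 at least one failed device, 2 unusable, 4 error reading the device. array_health and the MDADM override are hypothetical names.

```shell
#!/bin/sh
# Sketch (assumption): summarise each array from "mdadm --detail --test",
# whose exit status is 0 (normal), 1 (at least one failed device),
# 2 (unusable) or 4 (could not read the device).
# MDADM is a hypothetical override used for testing.
array_health() {
    for md in "$@"; do
        "${MDADM:-mdadm}" --detail --test "$md" >/dev/null 2>&1
        case $? in
            0) echo "$md: ok" ;;
            1) echo "$md: degraded" ;;
            2) echo "$md: unusable" ;;
            *) echo "$md: error" ;;
        esac
    done
}
```

Usage: `array_health /dev/md0 /dev/md1 /dev/md2`.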

What caused my misunderstanding?

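You have two devices of roughly the same size that you want to mirror each other. If possible, prepare one more device to serve as a standby spare disk. When an active device breaks, the spare disk automatically fills in for the mirror.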

The Software-RAID HOWTO: RAID Setup: 4.4 RAID-1

Reading that, I assumed that with one active device and one spare, the spare would automatically become active when the active device crashed.

But the configuration shown right after that passage is:

raiddev /dev/md0

raid-level 1

nr-raid-disks 2

nr-spare-disks 0

chunk-size 4

persistent-superblock 1

device /dev/sdb6

raid-disk 0

device /dev/sdc5

raid-disk 1

The number of spares (nr-spare-disks) is 0, and nr-raid-disks is 2...

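So the answer is to make both disks active after all: both halves of a mirror are raid disks, and a spare is an extra disk on top of them.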

Update 2007-09-24: Making the system bootable from either HDD (GRUB configuration)

When I disabled the sda HDD in the BIOS, the system no longer booted.

In other words, as things stand the system will not boot if sda fails.

Use grub to make the system bootable from the MBR (master boot record) of sdb as well.

Run the grub command, then type the settings at its prompt.

GRUB's files are /boot/grub/stage1, /boot/grub/stage2 and /boot/grub/menu.lst.

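Because they live on the partition mounted at /boot, and GRUB resolves paths from the top of that partition, leave out the /boot part when writing them (install /grub/stage1 (hd0) /grub/stage2 p /grub/menu.lst).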

If /boot is not a separate partition but part of /, include the /boot part (install /boot/grub/stage1 (hd0) /boot/grub/stage2 p /boot/grub/menu.lst).

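The input looks like this. (device (hd0) /dev/sdb makes GRUB treat sdb as the first BIOS disk, which is what it becomes when sda is missing, so the boot code written to sdb reads its files from sdb.)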

# grub

device (hd0) /dev/sdb

root (hd0,0)

install /grub/stage1 (hd0) /grub/stage2 p /grub/menu.lst

quit

The actual session:

# grub

Probing devices to guess BIOS drives. This may take a long time.

GNU GRUB version 0.97 (640K lower / 3072K upper memory)

[ Minimal BASH-like line editing is supported. For

the first word, TAB lists possible command

completions. Anywhere else TAB lists the possible

completions of a device/filename. ]

grub> device (hd0) /dev/sdb

device (hd0) /dev/sdb

grub> root (hd0,0)

root (hd0,0)

Filesystem type is ext2fs, partition type 0xfd

grub> install /grub/stage1 (hd0) /grub/stage2 p /grub/menu.lst

install /grub/stage1 (hd0) /grub/stage2 p /grub/menu.lst

grub> quit
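Typing into the grub shell works, but the same session could presumably be scripted. A sketch under assumptions: GRUB legacy (0.97, as above) reads commands from standard input when started with --batch, and grub_commands and install_grub_both are hypothetical helper names. The second argument of grub_commands reproduces the rule above about whether paths carry the /boot prefix.

```shell
#!/bin/sh
# Sketch (assumption): print the GRUB legacy commands that install stage1
# into the MBR of DISK, with the stage files on its first partition.
# Second argument: "yes" (default) if /boot is its own partition, so the
# paths omit the /boot prefix; anything else adds the prefix.
grub_commands() {
    disk=$1
    if [ "${2:-yes}" = yes ]; then prefix=; else prefix=/boot; fi
    echo "device (hd0) $disk"
    echo "root (hd0,0)"
    echo "install $prefix/grub/stage1 (hd0) $prefix/grub/stage2 p $prefix/grub/menu.lst"
    echo "quit"
}

# Feed the commands to grub for both disks (needs root; writes both MBRs).
install_grub_both() {
    for disk in /dev/sda /dev/sdb; do
        grub_commands "$disk" yes | grub --batch --no-floppy || return 1
    done
}
```

`grub_commands /dev/sdb` prints exactly the four lines typed in the session above.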

Now the system boots even with the sda HDD disabled in the BIOS.

Of course, it also boots with the sdb HDD disabled.

That takes care of booting, but for some reason I did not receive any warning mail about the disabled HDD...
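One thing worth checking (an assumption, not tested here): mdadm's monitor mode mails alerts to the address on the MAILADDR line of /etc/mdadm/mdadm.conf, and `mdadm --monitor --scan --oneshot --test` should send a test message for each array, which confirms whether mail gets through at all. The sketch below only checks that a non-comment MAILADDR line exists; has_mailaddr is a hypothetical name.

```shell
#!/bin/sh
# Sketch (assumption): succeed if an mdadm.conf-style file names a mail
# recipient for alerts (a non-comment MAILADDR line), fail otherwise.
# Usage: has_mailaddr [file]   (defaults to /etc/mdadm/mdadm.conf)
has_mailaddr() {
    grep -Eq '^[[:space:]]*MAILADDR[[:space:]]+[^[:space:]]' \
        "${1:-/etc/mdadm/mdadm.conf}"
}
```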

References

- Linux/Debian/Software_RAID - www.hanecci.com

- M.C.P.C.: On Fedora Core 2/3, RAID (md) is managed with mdadm.

- Installing with software RAID

- Dealing with a drive failure in RAID 1 (Linux 2.6.xx + mdadm) - IT Memo

- urekat's Skunk Diary 3 - Oh, so you can set it up in the installer

- Repairing Sarge RAID (replacing a hard disk in a Sarge RAID1 setup)

- Debian A2Z for Beginners -8- Software RAID

- Hatena Bookmark - NI-Lab.'s bookmarks / linux_raid

The mdadm manual

For easy reference, here is the mdadm man page converted to plain text. (Incidentally, I also found a Japanese translation: mdadm(8) ver.1.5 man page [Japanese].)

# man mdadm | col -bfx > mdadm.txt

Reformatting mdadm(8), please wait...

# cat mdadm.txt

MDADM(8) MDADM(8)

NAME

mdadm - manage MD devices aka Linux Software Raid.

SYNOPSIS

mdadm [mode] <raiddevice> [options] <component-devices>

DESCRIPTION

RAID devices are virtual devices created from two or more real block

devices. This allows multiple devices (typically disk drives or parti-

tions there-of) to be combined into a single device to hold (for exam-

ple) a single filesystem. Some RAID levels include redundancy and so

can survive some degree of device failure.

Linux Software RAID devices are implemented through the md (Multiple

Devices) device driver.

Currently, Linux supports LINEAR md devices, RAID0 (striping), RAID1

(mirroring), RAID4, RAID5, RAID6, RAID10, MULTIPATH, and FAULTY.

MULTIPATH is not a Software RAID mechanism, but does involve multiple

devices. For MULTIPATH each device is a path to one common physical

storage device.

FAULTY is also not true RAID, and it only involves one device. It pro-

vides a layer over a true device that can be used to inject faults.

MODES

mdadm has 7 major modes of operation:

Assemble

Assemble the parts of a previously created array into an active

array. Components can be explicitly given or can be searched

for. mdadm checks that the components do form a bona fide

array, and can, on request, fiddle superblock information so as

to assemble a faulty array.

Build Build an array that doesn't have per-device superblocks. For

these sorts of arrays, mdadm cannot differentiate between ini-

tial creation and subsequent assembly of an array. It also can-

not perform any checks that appropriate devices have been

requested. Because of this, the Build mode should only be used

together with a complete understanding of what you are doing.

Create Create a new array with per-device superblocks.

Follow or Monitor

Monitor one or more md devices and act on any state changes.

This is only meaningful for raid1, 4, 5, 6, 10 or multipath

arrays as only these have interesting state. raid0 or linear

never have missing, spare, or failed drives, so there is nothing

to monitor.

Grow Grow (or shrink) an array, or otherwise reshape it in some way.

Currently supported growth options including changing the active

size of component devices in RAID level 1/4/5/6 and changing the

number of active devices in RAID1.

Manage This is for doing things to specific components of an array such

as adding new spares and removing faulty devices.

Misc This is an 'everything else' mode that supports operations on

active arrays, operations on component devices such as erasing

old superblocks, and information gathering operations.

OPTIONS

Options for selecting a mode are:

-A, --assemble

Assemble a pre-existing array.

-B, --build

Build a legacy array without superblocks.

-C, --create

Create a new array.

-F, --follow, --monitor

Select Monitor mode.

-G, --grow

Change the size or shape of an active array.

If a device is given before any options, or if the first option is

--add, --fail, or --remove, then the MANAGE mode is assume. Anything

other than these will cause the Misc mode to be assumed.

Options that are not mode-specific are:

-h, --help

Display general help message or, after one of the above options,

a mode specific help message.

--help-options

Display more detailed help about command line parsing and some

commonly used options.

-V, --version

Print version information for mdadm.

-v, --verbose

Be more verbose about what is happening. This can be used twice

to be extra-verbose. The extra verbosity currently only affects

--detail --scan and --examine --scan.

-q, --quiet

Avoid printing purely informative messages. With this, mdadm

will be silent unless there is something really important to

report.

-b, --brief

Be less verbose. This is used with --detail and --examine.

Using --brief with --verbose gives an intermediate level of ver-

bosity.

-f, --force

Be more forceful about certain operations. See the various

modes of the exact meaning of this option in different contexts.

-c, --config=

Specify the config file. Default is to use

/etc/mdadm/mdadm.conf, or if that is missing, then

/etc/mdadm.conf. If the config file given is partitions then

nothing will be read, but mdadm will act as though the config

file contained exactly DEVICE partitions and will read

/proc/partitions to find a list of devices to scan. If the word

none is given for the config file, then mdadm will act as though

the config file were empty.

-s, --scan

scan config file or /proc/mdstat for missing information. In

general, this option gives mdadm permission to get any missing

information, like component devices, array devices, array iden-

tities, and alert destination from the configuration file:

/etc/mdadm/mdadm.conf. One exception is MISC mode when using

--detail or --stop in which case --scan says to get a list of

array devices from /proc/mdstat.

-e , --metadata=

Declare the style of superblock (raid metadata) to be used. The

default is 0.90 for --create, and to guess for other operations.

The default can be overridden by setting the metadata value for

the CREATE keyword in mdadm.conf.

Options are:

0, 0.90, default

Use the original 0.90 format superblock. This format

limits arrays to 28 componenet devices and limits compo-

nent devices of levels 1 and greater to 2 terabytes.

1, 1.0, 1.1, 1.2

Use the new version-1 format superblock. This has few

restrictions. The different subversion store the

superblock at different locations on the device, either

at the end (for 1.0), at the start (for 1.1) or 4K from

the start (for 1.2).

--homehost=

This will over-ride any HOMEHOST setting in the config file and

provides the identify of the host which should be considered the

home for any arrays.

When creating an array, the homehost will be recorded in the

superblock. For version-1 superblocks, it will be prefixed to

the array name. For version-0.90 superblocks part of the SHA1

hash of the hostname will be stored in the later half of the

UUID.

When reporting information about an array, any array which is

tagged for the given homehost will be reported as such.

When using Auto-Assemble, only arrays tagged for the given home-

host will be assembled.

For create, build, or grow:

-n, --raid-devices=

Specify the number of active devices in the array. This, plus

the number of spare devices (see below) must equal the number of

component-devices (including "missing" devices) that are listed

on the command line for --create. Setting a value of 1 is prob-

ably a mistake and so requires that --force be specified first.

A value of 1 will then be allowed for linear, multipath, raid0

and raid1. It is never allowed for raid4 or raid5.

This number can only be changed using --grow for RAID1 arrays,

and only on kernels which provide necessary support.

-x, --spare-devices=

Specify the number of spare (eXtra) devices in the initial

array. Spares can also be added and removed later. The number

of component devices listed on the command line must equal the

number of raid devices plus the number of spare devices.

-z, --size=

Amount (in Kibibytes) of space to use from each drive in

RAID1/4/5/6. This must be a multiple of the chunk size, and

must leave about 128Kb of space at the end of the drive for the

RAID superblock. If this is not specified (as it normally is

not) the smallest drive (or partition) sets the size, though if

there is a variance among the drives of greater than 1%, a warn-

ing is issued.

This value can be set with --grow for RAID level 1/4/5/6. If the

array was created with a size smaller than the currently active

drives, the extra space can be accessed using --grow. The size

can be given as max which means to choose the largest size that

fits on all current drives.

-c, --chunk=

Specify chunk size of kibibytes. The default is 64.

--rounding=

Specify rounding factor for linear array (==chunk size)

-l, --level=

Set raid level. When used with --create, options are: linear,

raid0, 0, stripe, raid1, 1, mirror, raid4, 4, raid5, 5, raid6,

6, raid10, 10, multipath, mp, faulty. Obviously some of these

are synonymous.

When used with --build, only linear, stripe, raid0, 0, raid1,

multipath, mp, and faulty are valid.

Not yet supported with --grow.

-p, --layout=

This option configures the fine details of data layout for

raid5, and raid10 arrays, and controls the failure modes for

faulty.

The layout of the raid5 parity block can be one of left-asymmet-

ric, left-symmetric, right-asymmetric, right-symmetric, la, ra,

ls, rs. The default is left-symmetric.

When setting the failure mode for faulty the options are: write-

transient, wt, read-transient, rt, write-persistent, wp, read-

persistent, rp, write-all, read-fixable, rf, clear, flush, none.

Each mode can be followed by a number which is used as a period

between fault generation. Without a number, the fault is gener-

ated once on the first relevant request. With a number, the

fault will be generated after that many request, and will con-

tinue to be generated every time the period elapses.

Multiple failure modes can be current simultaneously by using

the "--grow" option to set subsequent failure modes.

"clear" or "none" will remove any pending or periodic failure

modes, and "flush" will clear any persistent faults.

To set the parity with "--grow", the level of the array

("faulty") must be specified before the fault mode is specified.

Finally, the layout options for RAID10 are one of 'n', 'o' or

'p' followed by a small number. The default is 'n2'.

n signals 'near' copies. Multiple copies of one data block are

at similar offsets in different devices.

o signals 'offset' copies. Rather than the chunks being dupli-

cated within a stripe, whole stripes are duplicated but are

rotated by one device so duplicate blocks are on different

devices. Thus subsequent copies of a block are in the next

drive, and are one chunk further down.

f signals 'far' copies (multiple copies have very different off-

sets). See md(4) for more detail about 'near' and 'far'.

The number is the number of copies of each datablock. 2 is nor-

mal, 3 can be useful. This number can be at most equal to the

number of devices in the array. It does not need to divide

evenly into that number (e.g. it is perfectly legal to have an

'n2' layout for an array with an odd number of devices).

--parity=

same as --layout (thus explaining the p of -p).

-b, --bitmap=

Specify a file to store a write-intent bitmap in. The file

should not exist unless --force is also given. The same file

should be provided when assembling the array. If the word

internal is given, then the bitmap is stored with the metadata

on the array, and so is replicated on all devices. If the word

none is given with --grow mode, then any bitmap that is present

is removed.

To help catch typing errors, the filename must contain at least

one slash ('/') if it is a real file (not 'internal' or 'none').

Note: external bitmaps are only known to work on ext2 and ext3.

Storing bitmap files on other filesystems may result in serious

problems.

--bitmap-chunk=

Set the chunksize of the bitmap. Each bit corresponds to that

many Kilobytes of storage. When using a file based bitmap, the

default is to use the smallest size that is atleast 4 and

requires no more than 2^21 chunks. When using an internal

bitmap, the chunksize is automatically determined to make best

use of available space.

-W, --write-mostly

subsequent devices lists in a --build, --create, or --add com-

mand will be flagged as 'write-mostly'. This is valid for RAID1

only and means that the 'md' driver will avoid reading from

these devices if at all possible. This can be useful if mirror-

ing over a slow link.

--write-behind=

Specify that write-behind mode should be enabled (valid for

RAID1 only). If an argument is specified, it will set the maxi-

mum number of outstanding writes allowed. The default value is

256. A write-intent bitmap is required in order to use write-

behind mode, and write-behind is only attempted on drives marked

as write-mostly.

--assume-clean

Tell mdadm that the array pre-existed and is known to be clean.

It can be useful when trying to recover from a major failure as

you can be sure that no data will be affected unless you actu-

ally write to the array. It can also be used when creating a

RAID1 or RAID10 if you want to avoid the initial resync, however

this practice - while normally safe - is not recommended. Use

this ony if you really know what you are doing.

--backup-file=

This is needed when --grow is used to increase the number of

raid-devices in a RAID5 if there are no spare devices avail-

able. See the section below on RAID_DEVICE CHANGES. The file

should be stored on a separate device, not on the raid array

being reshaped.

-N, --name=

Set a name for the array. This is currently only effective when

creating an array with a version-1 superblock. The name is a

simple textual string that can be used to identify array compo-

nents when assembling.

-R, --run

Insist that mdadm run the array, even if some of the components

appear to be active in another array or filesystem. Normally

mdadm will ask for confirmation before including such components

in an array. This option causes that question to be suppressed.

-f, --force

Insist that mdadm accept the geometry and layout specified with-

out question. Normally mdadm will not allow creation of an

array with only one device, and will try to create a raid5 array

with one missing drive (as this makes the initial resync work

faster). With --force, mdadm will not try to be so clever.

-a, --auto{=no,yes,md,mdp,part,p}{NN}

Instruct mdadm to create the device file if needed, possibly

allocating an unused minor number. "md" causes a non-partition-

able array to be used. "mdp", "part" or "p" causes a partition-

able array (2.6 and later) to be used. "yes" requires the named

md device to have a 'standard' format, and the type and minor

number will be determined from this. See DEVICE NAMES below.

The argument can also come immediately after "-a". e.g. "-ap".

If --scan is also given, then any auto= entries in the config

file will over-ride the --auto instruction given on the command

line.

For partitionable arrays, mdadm will create the device file for

the whole array and for the first 4 partitions. A different

number of partitions can be specified at the end of this option

(e.g. --auto=p7). If the device name ends with a digit, the

partition names add a 'p', and a number, e.g. "/dev/home1p3".

If there is no trailing digit, then the partition names just

have a number added, e.g. "/dev/scratch3".

If the md device name is in a 'standard' format as described in

DEVICE NAMES, then it will be created, if necessary, with the

appropriate number based on that name. If the device name is

not in one of these formats, then a unused minor number will be

allocated. The minor number will be considered unused if there

is no active array for that number, and there is no entry in

/dev for that number and with a non-standard name.

--symlink=no

Normally when --auto causes mdadm to create devices in /dev/md/

it will also create symlinks from /dev/ with names starting with

md or md_. Use --symlink=no to suppress this, or --symlink=yes

to enforce this even if it is suppressing mdadm.conf.

For assemble:

-u, --uuid=

uuid of array to assemble. Devices which don't have this uuid

are excluded

-m, --super-minor=

Minor number of device that array was created for. Devices

which don't have this minor number are excluded. If you create

an array as /dev/md1, then all superblocks will contain the

minor number 1, even if the array is later assembled as

/dev/md2.

Giving the literal word "dev" for --super-minor will cause mdadm

to use the minor number of the md device that is being assem-

bled. e.g. when assembling /dev/md0, will look for super blocks

with a minor number of 0.

-N, --name=

Specify the name of the array to assemble. This must be the

name that was specified when creating the array. It must either

match then name stored in the superblock exactly, or it must

match with the current homehost is added to the start of the

given name.

-f, --force

Assemble the array even if some superblocks appear out-of-date

-R, --run

Attempt to start the array even if fewer drives were given than

were present last time the array was active. Normally if not

all the expected drives are found and --scan is not used, then

the array will be assembled but not started. With --run an

attempt will be made to start it anyway.

--no-degraded

This is the reverse of --run in that it inhibits the started if

array unless all expected drives are present. This is only

needed with --scan and can be used if you physical connections

to devices are not as reliable as you would like.

-a, --auto{=no,yes,md,mdp,part}

See this option under Create and Build options.

-b, --bitmap=

Specify the bitmap file that was given when the array was cre-

ated. If an array has an internal bitmap, there is no need to

specify this when assembling the array.

--backup-file=

If --backup-file was used to grow the number of raid-devices in

a RAID5, and the system crashed during the critical section,

then the same --backup-file must be presented to --assemble to

allow possibly corrupted data to be restored.

-U, --update=

Update the superblock on each device while assembling the array.

The argument given to this flag can be one of sparc2.2, sum-

maries, uuid, name, homehost, resync, byteorder, or super-minor.

The sparc2.2 option will adjust the superblock of an array what

was created on a Sparc machine running a patched 2.2 Linux ker-

nel. This kernel got the alignment of part of the superblock

wrong. You can use the --examine --sparc2.2 option to mdadm to

see what effect this would have.

The super-minor option will update the preferred minor field on

each superblock to match the minor number of the array being

assembled. This can be useful if --examine reports a different

"Preferred Minor" to --detail. In some cases this update will

be performed automatically by the kernel driver. In particular

the update happens automatically at the first write to an array

with redundancy (RAID level 1 or greater) on a 2.6 (or later)

kernel.

The uuid option will change the uuid of the array. If a UUID is

given with the "--uuid" option that UUID will be used as a new

UUID and will NOT be used to help identify the devices in the

array. If no "--uuid" is given, a random uuid is chosen.

The name option will change the name of the array as stored in

the superblock. This is only supported for version-1

superblocks.

The homehost option will change the homehost as recorded in the

superblock. For version-0 superblocks, this is the same as

updating the UUID. For version-1 superblocks, this involves

updating the name.

The resync option will cause the array to be marked dirty mean-

ing that any redundancy in the array (e.g. parity for raid5,

copies for raid1) may be incorrect. This will cause the raid

system to perform a "resync" pass to make sure that all redun-

dant information is correct.

The byteorder option allows arrays to be moved between machines

with different byte-order. When assembling such an array for

the first time after a move, giving --update=byteorder will

cause mdadm to expect superblocks to have their byteorder

reversed, and will correct that order before assembling the

array. This is only valid with original (Version 0.90)

superblocks.

The summaries option will correct the summaries in the

superblock. That is the counts of total, working, active,

failed, and spare devices.

--auto-update-homehost

This flag is only meaning with auto-assembly (see discussion

below). In that situation, if no suitable arrays are found for

this homehost, mdadm will recan for any arrays at all and will

assemble them and update the homehost to match the current host.

For Manage mode:

-a, --add

hot-add listed devices.

--re-add

re-add a device that was recently removed from an array.

-r, --remove

remove listed devices. They must not be active. i.e. they

should be failed or spare devices.

-f, --fail

mark listed devices as faulty.

--set-faulty

same as --fail.

Each of these options require that the first device list is the array

to be acted upon and the remainder are component devices to be added,

removed, or marked as fault. Several different operations can be spec-

ified for different devices, e.g.

mdadm /dev/md0 --add /dev/sda1 --fail /dev/sdb1 --remove /dev/sdb1

Each operation applies to all devices listed until the next operations.

If an array is using a write-intent bitmap, then devices which have

been removed can be re-added in a way that avoids a full reconstruction

but instead just updated the blocks that have changed since the device

was removed. For arrays with persistent metadata (superblocks) this is

done automatically. For arrays created with --build mdadm needs to be

told that this device we removed recently with --re-add.

Devices can only be removed from an array if they are not in active use, i.e. they must be spares or failed devices. To remove an active device, it must be marked as faulty first.

For Misc mode:

-Q, --query

Examine a device to see (1) if it is an md device and (2) if it is a component of an md array. Information about what is discovered is presented.

-D, --detail

Print detail of one or more md devices.

-E, --examine

Print content of md superblock on device(s).

--sparc2.2

If an array was created on a 2.2 Linux kernel patched with RAID support, the superblock will have been created incorrectly, or at least incompatibly with 2.4 and later kernels. Using the --sparc2.2 flag with --examine will fix the superblock before displaying it. If this appears to do the right thing, then the array can be successfully assembled using --assemble --update=sparc2.2.

-X, --examine-bitmap

Report information about a bitmap file.

-R, --run

start a partially built array.

-S, --stop

deactivate array, releasing all resources.

-o, --readonly

mark array as readonly.

-w, --readwrite

mark array as readwrite.

--zero-superblock

If the device contains a valid md superblock, the block is overwritten with zeros. With --force the block where the superblock would be is overwritten even if it doesn't appear to be valid.

-t, --test

When used with --detail, the exit status of mdadm is set to reflect the status of the device.

For Monitor mode:

-m, --mail

Give a mail address to send alerts to.

-p, --program, --alert

Give a program to be run whenever an event is detected.

-y, --syslog

Cause all events to be reported through 'syslog'. The messages have a facility of 'daemon' and varying priorities.

-d, --delay

Give a delay in seconds. mdadm polls the md arrays and then waits this many seconds before polling again. The default is 60 seconds.

-f, --daemonise

Tell mdadm to run as a background daemon if it decides to monitor anything. This causes it to fork and run in the child, and to disconnect from the terminal. The process id of the child is written to stdout. This is useful with --scan, which will only continue monitoring if a mail address or alert program is found in the config file.

-i, --pid-file

When mdadm is running in daemon mode, write the pid of the daemon process to the specified file, instead of printing it on standard output.

-1, --oneshot

Check arrays only once. This will generate NewArray events and, more significantly, DegradedArray and SparesMissing events. Running

mdadm --monitor --scan -1

from a cron script will ensure regular notification of any degraded arrays.

-t, --test

Generate a TestMessage alert for every array found at startup. This alert gets mailed and passed to the alert program. This can be used for testing that alert messages do get through successfully.

ASSEMBLE MODE

Usage: mdadm --assemble md-device options-and-component-devices...

Usage: mdadm --assemble --scan md-devices-and-options...

Usage: mdadm --assemble --scan options...

This usage assembles one or more raid arrays from pre-existing components. For each array, mdadm needs to know the md device, the identity of the array, and a number of component-devices. These can be found in a number of ways.

In the first usage example (without --scan) the first device given is the md device. In the second usage example, all devices listed are treated as md devices and assembly is attempted. In the third (where no devices are listed) all md devices that are listed in the configuration file are assembled.

If precisely one device is listed, but --scan is not given, then mdadm acts as though --scan was given and identity information is extracted from the configuration file.

The identity can be given with the --uuid option, with the --super-minor option, can be found in the config file, or will be taken from the superblock on the first component-device listed on the command line.

Devices can be given on the --assemble command line or in the config file. Only devices which have an md superblock which contains the right identity will be considered for any array.

The config file is only used if explicitly named with --config or requested with (a possibly implicit) --scan. In the latter case, /etc/mdadm/mdadm.conf is used.

If --scan is not given, then the config file will only be used to find the identity of md arrays.

Normally the array will be started after it is assembled. However, if --scan is not given and insufficient drives were listed to start a complete (non-degraded) array, then the array is not started (to guard against usage errors). To insist that the array be started in this case (as may work for RAID1, 4, 5, 6, or 10), give the --run flag.

If an auto option is given, either on the command line (--auto) or in the configuration file (e.g. auto=part), then mdadm will create the md device if necessary or will re-create it if it doesn't look usable as it is.

This can be useful for handling partitioned devices (which don't have a stable device number; it can change after a reboot) and when using "udev" to manage your /dev tree (udev cannot handle md devices because of the unusual device initialisation conventions).

If the option to "auto" is "mdp" or "part" or (on the command line only) "p", then mdadm will create a partitionable array, using the first free one that is not in use and does not already have an entry in /dev (apart from numeric /dev/md* entries).

If the option to "auto" is "yes" or "md" or (on the command line) nothing, then mdadm will create a traditional, non-partitionable md array.

It is expected that the "auto" functionality will be used to create device entries with meaningful names such as "/dev/md/home" or "/dev/md/root", rather than names based on the numerical array number.

When using this option to create a partitionable array, the device files for the first 4 partitions are also created. If a different number is required it can be simply appended to the auto option, e.g. "auto=part8". Partition names are created by appending a digit string to the device name, with an intervening "p" if the device name ends with a digit.
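The partition-naming rule can be sketched as follows. This is a hypothetical Python helper that assumes the auto value is "mdp", "part" or "p", optionally followed by a partition count, as described above; it only computes names and creates no device files.

```python
import re
from typing import List


def partition_device(array_dev: str, number: int) -> str:
    """Name of partition `number` of a partitionable md array."""
    # Insert "p" only when the array name itself ends with a digit.
    sep = "p" if array_dev[-1:].isdigit() else ""
    return f"{array_dev}{sep}{number}"


def auto_partition_names(array_dev: str, auto: str = "part") -> List[str]:
    """Partition device names for an auto=mdp/part/p[N] option (sketch)."""
    m = re.fullmatch(r"(?:mdp|part|p)(\d*)", auto)
    if m is None:
        raise ValueError(f"not a partitionable auto= value: {auto}")
    count = int(m.group(1)) if m.group(1) else 4   # first 4 partitions by default
    return [partition_device(array_dev, n) for n in range(1, count + 1)]
```

For example, partition_device("/dev/md/d1", 2) gives "/dev/md/d1p2", matching the DEVICE NAMES section, while "/dev/md/home" gets plain digits appended.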

The --auto option is also available in Build and Create modes. As those modes do not use a config file, the "auto=" config option does not apply to these modes.

Auto Assembly

When --assemble is used with --scan and no devices are listed, mdadm will first attempt to assemble all the arrays listed in the config file.

If a homehost has been specified (either in the config file or on the command line), mdadm will look further for possible arrays and will try to assemble anything that it finds which is tagged as belonging to the given homehost. This is the only situation where mdadm will assemble arrays without being given a specific device name or identity information for the array.

If mdadm finds a consistent set of devices that look like they should comprise an array, and if the superblock is tagged as belonging to the given home host, it will automatically choose a device name and try to assemble the array. If the array uses version-0.90 metadata, then the minor number as recorded in the superblock is used to create a name in /dev/md/, so for example /dev/md/3. If the array uses version-1 metadata, then the name from the superblock is used to similarly create a name in /dev/md. The name will have any 'host' prefix stripped first.
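A minimal Python sketch of the naming rule above. It assumes the 'host' prefix in a version-1 name is separated by a colon (e.g. "hogehoge:home"); that separator is an assumption, not something this man page states, and the function name is hypothetical.

```python
from typing import Optional


def auto_assembly_name(metadata: str, minor: Optional[int] = None,
                       name: Optional[str] = None) -> str:
    """Device name auto-assembly would pick, per the rules described above (sketch)."""
    if metadata == "0.90":
        if minor is None:
            raise ValueError("0.90 metadata: the recorded minor number is needed")
        return f"/dev/md/{minor}"
    if metadata.startswith("1"):
        if not name:
            raise ValueError("version-1 metadata: the superblock name is needed")
        # Assumption: a 'host' prefix is written as "host:name" and is stripped.
        return "/dev/md/" + name.split(":", 1)[-1]
    raise ValueError(f"unsupported metadata version {metadata!r}")
```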

If mdadm cannot find any array for the given host at all, and if --auto-update-homehost is given, then mdadm will search again for any array (not just an array created for this host) and will assemble each assuming --update=homehost. This will change the host tag in the superblock so that on the next run, these arrays will be found without the second pass. The intention of this feature is to support transitioning a set of md arrays to using homehost tagging.

The reason for requiring arrays to be tagged with the homehost for auto assembly is to guard against problems that can arise when moving devices from one host to another.

BUILD MODE

Usage: mdadm --build device --chunk=X --level=Y --raid-devices=Z devices

This usage is similar to --create. The difference is that it creates an array without a superblock. With these arrays there is no difference between initially creating the array and subsequently assembling the array, except that hopefully there is useful data there in the second case.

The level may be raid0, linear, multipath, or faulty, or one of their synonyms. All devices must be listed and the array will be started once complete.

CREATE MODE

Usage: mdadm --create device --chunk=X --level=Y --raid-devices=Z devices

This usage will initialise a new md array, associate some devices with it, and activate the array.

If the --auto option is given (as described in more detail in the section on Assemble mode), then the md device will be created with a suitable device number if necessary.

As devices are added, they are checked to see if they contain raid superblocks or filesystems. They are also checked to see if the variance in device size exceeds 1%.

If any discrepancy is found, the array will not automatically be run, though the presence of a --run can override this caution.

To create a "degraded" array in which some devices are missing, simply give the word "missing" in place of a device name. This will cause mdadm to leave the corresponding slot in the array empty. For a RAID4 or RAID5 array at most one slot can be "missing"; for a RAID6 array at most two slots. For a RAID1 array, only one real device needs to be given. All of the others can be "missing".
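A minimal Python sketch of these limits, for example to sanity-check a device list before running something like mdadm --create /dev/md0 --level=1 --raid-devices=2 /dev/sda1 missing. The helper is hypothetical, it only handles the levels named above, and mdadm performs its own checks regardless.

```python
from typing import Sequence


def check_degraded_create(level: str, devices: Sequence[str]) -> None:
    """Raise ValueError if too many slots are "missing" for a --create at this level."""
    missing = sum(1 for d in devices if d == "missing")
    real = len(devices) - missing
    if level in ("raid4", "raid5"):
        limit = 1                      # at most one missing slot
    elif level == "raid6":
        limit = 2                      # at most two missing slots
    elif level == "raid1":
        limit = len(devices) - 1       # one real device is enough
    else:
        raise ValueError(f"level {level!r} is not covered by this sketch")
    if real == 0 or missing > limit:
        raise ValueError(f"{level}: {missing} missing slot(s) given, at most {limit} allowed")
```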

When creating a RAID5 array, mdadm will automatically create a degraded array with an extra spare drive. This is because building the spare into a degraded array is in general faster than resyncing the parity on a non-degraded, but not clean, array. This feature can be overridden with the --force option.

When creating an array with version-1 metadata a name for the array is required. If this is not given with the --name option, mdadm will choose a name based on the last component of the name of the device being created. So if /dev/md3 is being created, then the name 3 will be chosen. If /dev/md/home is being created, then the name home will be used.
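The default-name rule can be approximated in a few lines of Python. This is a hypothetical helper that simply mimics the two examples given above (stripping "md" from a traditional mdN name is an assumption drawn from the /dev/md3 example); mdadm's real logic may differ in edge cases.

```python
import os


def default_array_name(device: str) -> str:
    """Guess the version-1 array name used when --name is not given (sketch)."""
    last = os.path.basename(device.rstrip("/"))
    # Assumption: a traditional "mdN" component contributes just its number,
    # matching the /dev/md3 -> "3" example above.
    if last.startswith("md") and last[2:].isdigit():
        return last[2:]
    return last
```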

The General Management options that are valid with --create are:

--run

insist on running the array even if some devices look like they might be in use.

--readonly

start the array readonly - not supported yet.

MANAGE MODE

Usage: mdadm device options... devices...

This usage will allow individual devices in an array to be failed, removed or added. It is possible to perform multiple operations with one command. For example:

mdadm /dev/md0 -f /dev/hda1 -r /dev/hda1 -a /dev/hda1

will firstly mark /dev/hda1 as faulty in /dev/md0 and will then remove it from the array and finally add it back in as a spare. However, only one md array can be affected by a single command.

MISC MODE

Usage: mdadm options ... devices ...

MISC mode includes a number of distinct operations that operate on distinct devices. The operations are:

--query

The device is examined to see if it is (1) an active md array, or (2) a component of an md array. The information discovered is reported.

--detail

The device should be an active md device. mdadm will display a detailed description of the array. --brief or --scan will cause the output to be less detailed and the format to be suitable for inclusion in /etc/mdadm/mdadm.conf. The exit status of mdadm will normally be 0 unless mdadm failed to get useful information about the device(s). However, if the --test option is given, then the exit status will be:

0 The array is functioning normally.

1 The array has at least one failed device.

2 The array has multiple failed devices and hence is unusable (raid4 or raid5).

4 There was an error while trying to get information about the device.
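A small Python sketch of how a script might use these exit statuses. The table is copied from the list above (this man page is for v2.5.6; any other value is reported as unexpected rather than guessed), and the helper names are hypothetical. check_array needs root and mdadm installed.

```python
import subprocess

# Exit statuses of `mdadm --detail --test`, as listed in this man page.
DETAIL_TEST_STATUS = {
    0: "array is functioning normally",
    1: "array has at least one failed device",
    2: "array has multiple failed devices and is unusable (raid4/raid5)",
    4: "error while getting information about the device",
}


def describe_status(code: int) -> str:
    """Translate an exit status into text; unknown codes are reported as such."""
    return DETAIL_TEST_STATUS.get(code, f"unexpected exit status {code}")


def check_array(md_device: str) -> str:
    """Run `mdadm --detail --test` on one array and describe the exit status."""
    try:
        result = subprocess.run(
            ["mdadm", "--detail", "--test", md_device],
            stdout=subprocess.DEVNULL,   # only the exit status matters here
            stderr=subprocess.DEVNULL,
        )
    except FileNotFoundError:
        return "mdadm is not installed"
    return describe_status(result.returncode)
```

For example, print(check_array("/dev/md0")) on the machine in this post should report the first entry while all members are up.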

--examine

The device should be a component of an md array. mdadm will read the md superblock of the device and display the contents. If --brief or --scan is given, then multiple devices that are components of the one array are grouped together and reported in a single entry suitable for inclusion in /etc/mdadm/mdadm.conf.

Having --scan without listing any devices will cause all devices listed in the config file to be examined.

--stop

The devices should be active md arrays which will be deactivated, as long as they are not currently in use.

--run

This will fully activate a partially assembled md array.

--readonly

This will mark an active array as read-only, providing that it

is not currently being used.

--readwrite

This will change a readonly array back to being read/write.

--scan

For all operations except --examine, --scan will cause the operation to be applied to all arrays listed in /proc/mdstat. For --examine, --scan causes all devices listed in the config file to be examined.

MONITOR MODE

Usage: mdadm --monitor options... devices...

This usage causes mdadm to periodically poll a number of md arrays and to report on any events noticed. mdadm will never exit once it decides that there are arrays to be checked, so it should normally be run in the background.

As well as reporting events, mdadm may move a spare drive from one array to another if they are in the same spare-group and if the destination array has a failed drive but no spares.

If any devices are listed on the command line, mdadm will only monitor those devices. Otherwise all arrays listed in the configuration file will be monitored. Further, if --scan is given, then any other md devices that appear in /proc/mdstat will also be monitored.

The result of monitoring the arrays is the generation of events. These events are passed to a separate program (if specified) and may be mailed to a given email address.

When passing an event to the program, the program is run once for each event and is given 2 or 3 command-line arguments. The first is the name of the event (see below). The second is the name of the md device which is affected, and the third is the name of a related device if relevant, such as a component device that has failed.

If --scan is given, then a program or an email address must be specified on the command line or in the config file. If neither is available, then mdadm will not monitor anything. Without --scan, mdadm will continue monitoring as long as something was found to monitor. If no program or email is given, then each event is reported to stdout.

The different events are:

DeviceDisappeared

An md array which previously was configured appears to no longer be configured. (syslog priority: Critical)

If mdadm was told to monitor an array which is RAID0 or Linear, then it will report DeviceDisappeared with the extra information Wrong-Level. This is because RAID0 and Linear do not support the device-failed, hot-spare and resync operations which are monitored.

RebuildStarted

An md array started reconstruction. (syslog priority: Warning)

RebuildNN

Where NN is 20, 40, 60, or 80, this indicates that the rebuild has passed that percentage of the total. (syslog priority: Warning)

RebuildFinished

An md array that was rebuilding isn't any more, either because it finished normally or was aborted. (syslog priority: Warning)

Fail

An active component device of an array has been marked as faulty. (syslog priority: Critical)

FailSpare

A spare component device which was being rebuilt to replace a faulty device has failed. (syslog priority: Critical)

SpareActive

A spare component device which was being rebuilt to replace a faulty device has been successfully rebuilt and has been made active. (syslog priority: Info)

NewArray

A new md array has been detected in the /proc/mdstat file. (syslog priority: Info)

DegradedArray

A newly noticed array appears to be degraded. This message is not generated when mdadm notices a drive failure which causes degradation, but only when mdadm notices that an array is degraded when it first sees the array. (syslog priority: Critical)

MoveSpare

A spare drive has been moved from one array in a spare-group to another to allow a failed drive to be replaced. (syslog priority: Info)

SparesMissing

If mdadm has been told, via the config file, that an array should have a certain number of spare devices, and mdadm detects that it has fewer than this number when it first sees the array, it will report a SparesMissing message. (syslog priority: Warning)

TestMessage

An array was found at startup, and the --test flag was given. (syslog priority: Info)

Only Fail, FailSpare, DegradedArray, SparesMissing, and TestMessage cause email to be sent. All events cause the program to be run. The program is run with two or three arguments: the event name, the array device and possibly a second device.

Each event has an associated array device (e.g. /dev/md1) and possibly a second device. For Fail, FailSpare, and SpareActive the second device is the relevant component device. For MoveSpare the second device is the array that the spare was moved from.
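A hypothetical alert program for --program/--alert might look like the following Python sketch. The priorities and the set of mailed events are copied from the event list above; the output format and the "unknown" fallback are illustrative assumptions. It just prints one line per event.

```python
#!/usr/bin/env python3
"""Hypothetical alert program for `mdadm --monitor --program=...` (sketch only)."""
import sys
from typing import Sequence

# Syslog priority of each event, as listed in this man page.
EVENT_PRIORITY = {
    "DeviceDisappeared": "crit",
    "RebuildStarted": "warning",
    "RebuildFinished": "warning",
    "Fail": "crit",
    "FailSpare": "crit",
    "SpareActive": "info",
    "NewArray": "info",
    "DegradedArray": "crit",
    "MoveSpare": "info",
    "SparesMissing": "warning",
    "TestMessage": "info",
}
# Events that mdadm itself also mails (when a mail address is configured).
MAILED_EVENTS = {"Fail", "FailSpare", "DegradedArray", "SparesMissing", "TestMessage"}


def priority(event: str) -> str:
    # RebuildNN (NN = 20, 40, 60 or 80) is logged at Warning.
    if event.startswith("Rebuild") and event[len("Rebuild"):].isdigit():
        return "warning"
    return EVENT_PRIORITY.get(event, "unknown")


def format_alert(args: Sequence[str]) -> str:
    """Format EVENT MD_DEVICE [SECOND_DEVICE] as one log line."""
    if len(args) not in (2, 3):
        raise ValueError("expected: EVENT MD_DEVICE [SECOND_DEVICE]")
    event, md_device = args[0], args[1]
    line = f"[{priority(event)}] {event} on {md_device}"
    if len(args) == 3:
        # MoveSpare: the array the spare came from; Fail etc.: the component device.
        label = "from" if event == "MoveSpare" else "device"
        line += f" ({label} {args[2]})"
    if event in MAILED_EVENTS:
        line += " [mailed]"
    return line


if __name__ == "__main__" and len(sys.argv) > 1:
    # mdadm passes two or three arguments; print the formatted line.
    print(format_alert(sys.argv[1:]))
```

It could be tried with something like mdadm --monitor --scan --test -1 --program=/usr/local/sbin/md-alert.py, which per the --test and --oneshot descriptions should produce one TestMessage per array (the path is a placeholder).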

For mdadm to move spares from one array to another, the different arrays need to be labelled with the same spare-group in the configuration file. The spare-group name can be any string. It is only necessary that different spare groups use different names.

When mdadm detects that an array which is in a spare group has fewer active devices than necessary for the complete array, and has no spare devices, it will look for another array in the same spare group that has a full complement of working drives and a spare. It will then attempt to remove the spare from the second array and add it to the first. If the removal succeeds but the adding fails, then it is added back to the original array.
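The spare-group rule can be modelled as a toy Python simulation. The MdArray class and move_one_spare function are hypothetical, group membership here stands in for the spare-group= setting on ARRAY lines in mdadm.conf, and the "add back if adding fails" path is not modelled because the toy move cannot fail.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class MdArray:
    name: str
    spare_group: str
    raid_disks: int                      # devices needed for a complete array
    active: List[str]
    spares: List[str] = field(default_factory=list)


def move_one_spare(arrays: List[MdArray]) -> Optional[str]:
    """One pass of the spare-group rule; returns 'MoveSpare <to> <from>' or None."""
    for needy in arrays:
        if len(needy.active) >= needy.raid_disks or needy.spares:
            continue                     # complete, or already has a spare
        for donor in arrays:
            if donor is needy or donor.spare_group != needy.spare_group:
                continue
            if len(donor.active) >= donor.raid_disks and donor.spares:
                spare = donor.spares.pop(0)   # remove the spare from the donor...
                needy.spares.append(spare)    # ...and add it to the degraded array
                return f"MoveSpare {needy.name} {donor.name}"
    return None
```

The returned string mirrors the MoveSpare event arguments described above: the receiving array first, then the array the spare came from.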

GROW MODE

The GROW mode is used for changing the size or shape of an active array. For this to work, the kernel must support the necessary change. Various types of growth are being added during 2.6 development, including restructuring a raid5 array to have more active devices.

Currently the only support available is to

o change the "size" attribute for RAID1, RAID5 and RAID6.

o increase the "raid-disks" attribute of RAID1 and RAID5.

o add a write-intent bitmap to any array which supports these bitmaps, or remove a write-intent bitmap from such an array.

SIZE CHANGES

Normally when an array is built the "size" is taken from the smallest of the drives. If all the small drives in an array are, one at a time, removed and replaced with larger drives, then you could have an array of large drives with only a small amount used. In this situation, changing the "size" with "GROW" mode will allow the extra space to start being used. If the size is increased in this way, a "resync" process will start to make sure the new parts of the array are synchronised.

Note that when an array changes size, any filesystem that may be stored in the array will not automatically grow to use the space. The filesystem will need to be explicitly told to use the extra space.

RAID-DEVICES CHANGES

A RAID1 array can work with any number of devices from 1 upwards (though 1 is not very useful). There may be times when you want to increase or decrease the number of active devices. Note that this is different to hot-add or hot-remove, which change the number of inactive devices.

When reducing the number of devices in a RAID1 array, the slots which are to be removed from the array must already be vacant. That is, the devices that were in those slots must be failed and removed.

When the number of devices is increased, any hot spares that are present will be activated immediately.
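The RAID1 rules in this subsection can be modelled with a small toy class. This is a hypothetical Python sketch, not how md or mdadm is implemented, and it assumes the slots dropped on shrinking are the highest-numbered ones. It reproduces the situation from the beginning of this post: an array created with one active device plus one spare ends up with both devices active once the device count is raised to 2 (in mdadm this is Grow mode's raid-devices setting).

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Raid1Model:
    """Toy model of RAID1 slots and hot spares (illustration, not md's code)."""
    slots: List[Optional[str]]                   # active slots; None = vacant
    spares: List[str] = field(default_factory=list)

    def set_raid_devices(self, n: int) -> None:
        if n < 1:
            raise ValueError("a RAID1 array needs at least one slot")
        if n < len(self.slots):
            # Slots being dropped must already be vacant (failed and removed).
            if any(dev is not None for dev in self.slots[n:]):
                raise ValueError("fail and remove the devices in those slots first")
            del self.slots[n:]
        else:
            self.slots.extend([None] * (n - len(self.slots)))
            # Growing: hot spares are activated into the vacant slots.
            for i, dev in enumerate(self.slots):
                if dev is None and self.spares:
                    self.slots[i] = self.spares.pop(0)
```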

Increasing the number of active devices in a RAID5 is much more effort. Every block in the array will need to be read and written back to a new location. From 2.6.17, the Linux kernel is able to do this safely, including restarting an interrupted "reshape".

When relocating the first few stripes on a raid5, it is not possible to keep the data on disk completely consistent and crash-proof. To provide the required safety, mdadm disables writes to the array while this "critical section" is reshaped, and takes a backup of the data that is in that section. This backup is normally stored in any spare devices that the array has, however it can also be stored in a separate file specified with the --backup-file option. If this option is used, and the system does crash during the critical period, the same file must be passed to --assemble to restore the backup and reassemble the array.

BITMAP CHANGES

A write-intent bitmap can be added to, or removed from, an active array. Either internal bitmaps, or bitmaps stored in a separate file, can be added. Note that if you add a bitmap stored in a file which is in a filesystem that is on the raid array being affected, the system will deadlock. The bitmap must be on a separate filesystem.

EXAMPLES

mdadm --query /dev/name-of-device

This will find out if a given device is a raid array, or is part of one, and will provide brief information about the device.

mdadm --assemble --scan

This will assemble and start all arrays listed in the standard config file. This command will typically go in a system startup file.

mdadm --stop --scan

This will shut down all arrays that can be shut down (i.e. are not currently in use). This will typically go in a system shutdown script.

mdadm --follow --scan --delay=120

If (and only if) there is an email address or program given in the standard config file, then monitor the status of all arrays listed in that file by polling them every 2 minutes.

mdadm --create /dev/md0 --level=1 --raid-devices=2 /dev/hd[ac]1

Create /dev/md0 as a RAID1 array consisting of /dev/hda1 and /dev/hdc1.

echo 'DEVICE /dev/hd*[0-9] /dev/sd*[0-9]' > mdadm.conf

mdadm --detail --scan >> mdadm.conf

This will create a prototype config file that describes currently active arrays that are known to be made from partitions of IDE or SCSI drives. This file should be reviewed before being used as it may contain unwanted detail.

echo 'DEVICE /dev/hd[a-z] /dev/sd*[a-z]' > mdadm.conf

mdadm --examine --scan --config=mdadm.conf >> mdadm.conf

This will find what arrays could be assembled from existing IDE and SCSI whole drives (not partitions) and store the information in the format of a config file. This file is very likely to contain unwanted detail, particularly the devices= entries. It should be reviewed and edited before being used as an actual config file.

mdadm --examine --brief --scan --config=partitions

mdadm -Ebsc partitions

Create a list of devices by reading /proc/partitions, scan these for RAID superblocks, and print out a brief listing of all that was found.

mdadm -Ac partitions -m 0 /dev/md0

Scan all partitions and devices listed in /proc/partitions and assemble /dev/md0 out of all such devices with a RAID superblock with a minor number of 0.

mdadm --monitor --scan --daemonise > /var/run/mdadm

If the config file contains a mail address or alert program, run mdadm in the background in monitor mode monitoring all md devices. Also write the pid of the mdadm daemon to /var/run/mdadm.

mdadm --create --help

Provide help about the Create mode.

mdadm --config --help

Provide help about the format of the config file.

mdadm --help

Provide general help.

FILES

/proc/mdstat

If you're using the /proc filesystem, /proc/mdstat lists all active md devices with information about them. mdadm uses this to find arrays when --scan is given in Misc mode, and to monitor array reconstruction in Monitor mode.
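As an illustration of what /proc/mdstat contains, here is a minimal Python parser for the simple layout that appears in the mails later in this post ("md2 : active raid1 sda3[0] sdb3[1]" followed by "... blocks [2/2] [UU]"). It is a hypothetical sketch; it does not handle every mdstat variant (for example inactive arrays or extra state words), and mdadm itself remains the authoritative tool.

```python
import re
from typing import Dict, List

# "md2 : active raid1 sda3[0] sdb3[1]" -> name, state, level, member list
ARRAY_RE = re.compile(r"^(md\d+)\s*:\s*(\S+)\s+(\S+)\s+(.+)$")
# "310126720 blocks [2/2] [UU]" -> wanted devices, working devices, slot map
STATUS_RE = re.compile(r"\[(\d+)/(\d+)\]\s+\[([U_]+)\]")


def parse_mdstat(text: str) -> Dict[str, dict]:
    """Parse the simple /proc/mdstat layout (sketch only)."""
    arrays: Dict[str, dict] = {}
    current = None
    for line in text.splitlines():
        m = ARRAY_RE.match(line.strip())
        if m:
            name, state, level, members = m.groups()
            parts = members.split()
            current = {
                "state": state,
                "level": level,
                "members": parts,
                "spares": sum(p.endswith("(S)") for p in parts),
                "failed": sum(p.endswith("(F)") for p in parts),
                "wanted": None,
                "working": None,
            }
            arrays[name] = current
            continue
        s = STATUS_RE.search(line)
        if s and current is not None:
            current["wanted"] = int(s.group(1))
            current["working"] = int(s.group(2))
    return arrays


def degraded(arrays: Dict[str, dict]) -> List[str]:
    """Names of arrays with fewer working devices than wanted."""
    return [name for name, a in arrays.items()
            if a["working"] is not None and a["working"] < a["wanted"]]
```

On a live system it could be fed with open("/proc/mdstat").read().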

/etc/mdadm/mdadm.conf

The config file lists which devices may be scanned to see if they contain an MD superblock, and gives identifying information (e.g. UUID) about known MD arrays. See mdadm.conf(5) for more details.

DEVICE NAMES

While entries in the /dev directory can have any format you like, mdadm has an understanding of 'standard' formats which it uses to guide its behaviour when creating device files via the --auto option.

The standard names for non-partitioned arrays (the only sort of md array available in 2.4 and earlier) are either of

/dev/mdNN

/dev/md/NN

where NN is a number. The standard names for partitionable arrays (as available from 2.6 onwards) are one of

/dev/md/dNN

/dev/md_dNN

Partition numbers should be indicated by adding "pMM" to these, thus "/dev/md/d1p2".

NOTE

mdadm was previously known as mdctl.

mdadm is completely separate from the raidtools package, and does not use the /etc/raidtab configuration file at all.

SEE ALSO

For information on the various levels of RAID, check out:

http://ostenfeld.dk/~jakob/Software-RAID.HOWTO/

The latest version of mdadm should always be available from

http://www.kernel.org/pub/linux/utils/raid/mdadm/

mdadm.conf(5), md(4).

raidtab(5), raid0run(8), raidstop(8), mkraid(8).

v2.5.6 MDADM(8)

A totally unnecessary aside

Also, this has nothing to do with RAID, but I was a little surprised to find that the default locale is UTF-8.

$ env | grep LANG

LANG=ja_JP.UTF-8

Debian etch apparently uses ja_JP.UTF-8 rather than ja_JP.EUC-JP. I had only ever upgraded from sarge, so I hadn't noticed.

Ref. [debian-devel:16276] "Planning to switch to UTF-8 in the etch installer"

SparesMissing event (update: 2007-12-14)

Every time I reboot the OS, I get mail like this.

I can't remember when this started...

From root@hogehoge.localdomain Thu Dec 13 21:04:07 2007

Return-path: <root@hogehoge.localdomain>

Envelope-to: root@hogehoge.localdomain

Delivery-date: Thu, 13 Dec 2007 21:04:07 +0900

Received: from root by hogehoge.localdomain with local (Exim 4.63)

(envelope-from <root@hogehoge.localdomain>)

id 1J2mnP-0000q4-BU

for root@hogehoge.localdomain; Thu, 13 Dec 2007 21:04:07 +0900

From: mdadm monitoring <root@hogehoge.localdomain>

To: root@hogehoge.localdomain

Subject: SparesMissing event on /dev/md2:hogehoge

Message-Id: <E1J2mnP-0000q4-BU@hogehoge.localdomain>

Date: Thu, 13 Dec 2007 21:04:07 +0900

Status: RO

This is an automatically generated mail message from mdadm

running on hogehoge

A SparesMissing event had been detected on md device /dev/md2.

Faithfully yours, etc.

P.S. The /proc/mdstat file currently contains the following:

Personalities : [raid1]

md2 : active raid1 sda3[0] sdb3[1]

310126720 blocks [2/2] [UU]

md1 : active raid1 sda2[0] sdb2[1]

1951808 blocks [2/2] [UU]

md0 : active raid1 sda1[0] sdb1[1]

489856 blocks [2/2] [UU]

unused devices: <none>

From root@hogehoge.localdomain Thu Dec 13 21:04:07 2007

Return-path: <root@hogehoge.localdomain>

Envelope-to: root@hogehoge.localdomain

Delivery-date: Thu, 13 Dec 2007 21:04:07 +0900

Received: from root by hogehoge.localdomain with local (Exim 4.63)

(envelope-from <root@hogehoge.localdomain>)

id 1J2mnP-0000qb-L9

for root@hogehoge.localdomain; Thu, 13 Dec 2007 21:04:07 +0900

From: mdadm monitoring <root@hogehoge.localdomain>

To: root@hogehoge.localdomain

Subject: SparesMissing event on /dev/md0:hogehoge

Message-Id: <E1J2mnP-0000qb-L9@hogehoge.localdomain>

Date: Thu, 13 Dec 2007 21:04:07 +0900

Status: RO

This is an automatically generated mail message from mdadm

running on hogehoge

A SparesMissing event had been detected on md device /dev/md0.

Faithfully yours, etc.

P.S. The /proc/mdstat file currently contains the following:

Personalities : [raid1]

md2 : active raid1 sda3[0] sdb3[1]

310126720 blocks [2/2] [UU]

md1 : active raid1 sda2[0] sdb2[1]

1951808 blocks [2/2] [UU]

md0 : active raid1 sda1[0] sdb1[1]

489856 blocks [2/2] [UU]

unused devices: <none>

From root@hogehoge.localdomain Thu Dec 13 21:01:32 2007

Return-path: <root@hogehoge.localdomain>

Envelope-to: root@hogehoge.localdomain

Delivery-date: Thu, 13 Dec 2007 21:01:32 +0900

Received: from root by hogehoge.localdomain with local (Exim 4.63)

(envelope-from <root@hogehoge.localdomain>)

id 1J2mnP-0000qM-H0

for root@hogehoge.localdomain; Thu, 13 Dec 2007 21:04:07 +0900

From: mdadm monitoring <root@hogehoge.localdomain>

To: root@hogehoge.localdomain

Subject: SparesMissing event on /dev/md1:hogehoge

Message-Id: <E1J2mnP-0000qM-H0@hogehoge.localdomain>

Date: Thu, 13 Dec 2007 21:04:07 +0900

Status: RO

This is an automatically generated mail message from mdadm

running on hogehoge

A SparesMissing event had been detected on md device /dev/md1.

Faithfully yours, etc.

P.S. The /proc/mdstat file currently contains the following:

Personalities : [raid1]

md2 : active raid1 sda3[0] sdb3[1]

310126720 blocks [2/2] [UU]

md1 : active raid1 sda2[0] sdb2[1]

1951808 blocks [2/2] [UU]

md0 : active raid1 sda1[0] sdb1[1]

489856 blocks [2/2] [UU]

unused devices: <none>

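According to "Linux RAID入門" (Introduction to Linux RAID):

Event name: SparesMissing

Syslog level: Info

Meaning: detected fewer spare drives than are listed in the configuration file

So that's what it means. Maybe it's not something to worry about much. (The mdadm man page above gives its syslog priority as Warning, not Info.)

Just in case, let's look at the config file.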

hogehoge:~# cat /etc/mdadm/mdadm.conf

# mdadm.conf

#

# Please refer to mdadm.conf(5) for information about this file.

#

# by default, scan all partitions (/proc/partitions) for MD superblocks.

# alternatively, specify devices to scan, using wildcards if desired.

DEVICE partitions

# auto-create devices with Debian standard permissions

CREATE owner=root group=disk mode=0660 auto=yes

# automatically tag new arrays as belonging to the local system

HOMEHOST <system>

# instruct the monitoring daemon where to send mail alerts

MAILADDR root

# definitions of existing MD arrays

ARRAY /dev/md0 level=raid1 num-devices=1 UUID=8bba7643:fd066bd7:c4dd6cdd:ed8a149f

spares=1

ARRAY /dev/md1 level=raid1 num-devices=1 UUID=142686ef:43298491:b6bdce52:8a7fc717

spares=1

ARRAY /dev/md2 level=raid1 num-devices=1 UUID=9705e4d8:ff326a8a:0820ec24:30988c0c

spares=1

# This file was auto-generated on Sun, 23 Sep 2007 16:09:18 +0000

# by mkconf $Id: mkconf 261 2006-11-09 13:32:35Z madduck $

tags: zlashdot Linux Debian Linux Raid amd64

Posted by NI-Lab. (@nilab)